Some thoughts about HP 3PAR Adaptive Optimization

HP 3PAR Adaptive Optimization (AO) enables autonomic storage tiering on HP 3PAR storage arrays. With this feature, the HP 3PAR storage system analyzes IO and then migrates 128 MB regions between different storage tiers. Frequently accessed regions of volumes are moved to higher tiers, less frequently accessed regions are shifted to lower tiers. I often talk with customers about AO, and I know that this feature is sometimes misunderstood and misconfigured. This blog post summarizes the topics that are, in my opinion, important.

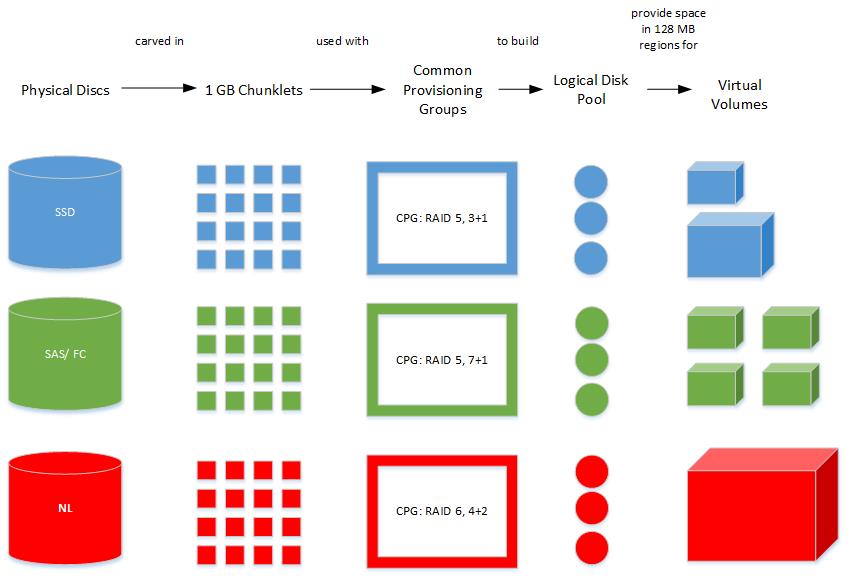

Basics about CPGs, LDs and VVs

A physical disk is divided into 1 GB portions, so-called chunklets. A Common Provisioning Group (CPG) creates a pool of logical disks (LDs), and therefore a pool of storage, that can be used to create virtual volumes (VVs). A CPG defines properties like the device type (SAS/ FC, NL, SSD), disk RPM, RAID level, availability level etc. These properties are used to create LDs. An LD is a collection of chunklets arranged in RAID sets. The size of an LD is determined by the number of data chunklets in the RAID set. If a CPG uses RAID 5 (3+1), an LD holds about 3 GB of data. When a VV is created, LDs are created in the size of the growth increment (which is usually 32 GB for SAS/ FC and NL, and 8 GB for SSD). So with RAID 5 (3+1), about 11 LDs are created for a 32 GB growth increment. A VV allocates space in regions of 128 MB (user and snapshot space) or 32 MB (admin space). Each region is on another LD, so a VV is striped across LDs and therefore across physical disks. I hope this drawing makes it easier to understand.

Patrick Terlisten/ vcloudnine.de/ Creative Commons CC0
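The LD arithmetic from the paragraph above can be checked with a few lines of Python. This is only a sketch of the simplified model used in this post (one RAID set of 1 GB data chunklets per LD); the function names are mine and not part of any 3PAR tool.

```python
import math

CHUNKLET_GB = 1  # chunklet size as described in this post


def ld_data_capacity_gb(data_chunklets: int) -> int:
    """Usable size of one LD in the simplified model (data chunklets only)."""
    return data_chunklets * CHUNKLET_GB


def lds_for_growth_increment(growth_increment_gb: int, data_chunklets: int) -> int:
    """Number of LDs needed to cover one growth increment, rounded up."""
    return math.ceil(growth_increment_gb / ld_data_capacity_gb(data_chunklets))


def regions_in(size_gb: int, region_mb: int = 128) -> int:
    """Number of regions of region_mb that fit into size_gb."""
    return size_gb * 1024 // region_mb


print(lds_for_growth_increment(32, 3))  # RAID 5 (3+1), 32 GB increment -> 11
print(lds_for_growth_increment(8, 3))   # RAID 5 (3+1), 8 GB increment  -> 3
print(regions_in(32))                   # 128 MB regions in 32 GB       -> 256
```

The rounding up is the reason for the "~ 11": 32 GB divided by 3 GB per LD is about 10.7.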

If the space of a CPG is nearly fully allocated, additional space in the size of the growth increment is allocated, which means that more LDs are created. Thin Reclamation can reclaim space from VVs in 16 KB increments, but free VV space is only returned to a CPG in 128 MB increments. A defragmentation process goes over the LDs and consolidates smaller pages into bigger contiguous regions. Over time, the LDs can become less efficient in space usage. With a process called “compacting”, mapped regions of VVs can be consolidated onto fewer, more utilized LDs. This may free disk space and increases the efficiency of space usage. VVs allocate space from free space on existing LDs or, if there is not enough contiguous free space, new LDs are created. Different VVs can share the same LD.
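To get a feeling for the two granularities (16 KB pages vs. 128 MB regions), here is a small sketch. It assumes the best case, in which defragmentation packs all free pages perfectly into whole regions; the function names are hypothetical.

```python
PAGE_KB = 16
REGION_MB = 128
PAGES_PER_REGION = REGION_MB * 1024 // PAGE_KB  # 8192 pages per region


def returnable_regions(free_pages: int) -> int:
    """Upper bound of whole 128 MB regions that could go back to the CPG."""
    return free_pages // PAGES_PER_REGION


def stranded_mb(free_pages: int) -> float:
    """Free space that stays in the VV because it does not fill a region."""
    return (free_pages % PAGES_PER_REGION) * PAGE_KB / 1024


free = 20000  # reclaimed 16 KB pages (example value)
print(returnable_regions(free))  # 2
print(stranded_mb(free))         # 56.5
```

In practice, the number of returned regions can be lower, because free pages are usually scattered over many regions.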

Relationship between Adaptive Optimization and CPGs

An Adaptive Optimization (AO) configuration consists, in simple terms, of CPGs, a mode configuration and optionally a schedule. An AO config must have at least two tiers configured and can have up to three tiers (tier 0, tier 1 and tier 2). Usually you configure a CPG with SSDs for tier 0, one with SAS/ FC disks for tier 1 and one with SAS-NL disks for tier 2. But there’s nothing wrong with configuring a SAS/ FC CPG with RAID 1 for tier 1 and a SAS/ FC CPG with RAID 5 for tier 2. Many combinations are possible. It’s important to understand that tier 1 should meet the performance requirements of your applications. It’s not a good idea to use a “slow” tier 1 and let AO move all data to tier 0 because your workload heats up regions. So every time you create a VV, it should be associated with your tier 1 CPG.

Mode configuration

The tiering analysis algorithm considers three different things:

- available space in tiers

- average latency

- average tier access rate densities

If the allocated space in a tier (a CPG) exceeds the tier size (or the CPG warning limit), AO will try to move data to other tiers. Busy regions will be moved to faster tiers, more idle regions will be moved to lower tiers. If your tier 0 exceeds the limit, but there’s space left in tier 1, AO will try to move more idle regions from tier 0 to tier 1. If all tiers exceed their limits, AO will do nothing.
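The space rule can be sketched as follows. This is not the AO algorithm itself, only an illustration of the behaviour described above; the `Tier` class and the numbers are made up.

```python
from dataclasses import dataclass


@dataclass
class Tier:
    name: str
    used_gb: float
    limit_gb: float  # tier size or CPG warning limit

    @property
    def over_limit(self) -> bool:
        return self.used_gb > self.limit_gb


def space_relief_targets(tiers: list[Tier]) -> dict[str, list[str]]:
    """Map each over-limit tier to the tiers that still have space.

    Returns an empty dict if nothing is over its limit, or if all tiers
    are over their limits (in that case nothing can be moved).
    """
    over = [t for t in tiers if t.over_limit]
    with_space = [t for t in tiers if not t.over_limit]
    if not with_space:
        return {}
    return {t.name: [s.name for s in with_space] for t in over}


tiers = [
    Tier("tier0", used_gb=950, limit_gb=900),
    Tier("tier1", used_gb=4000, limit_gb=6000),
    Tier("tier2", used_gb=7000, limit_gb=8000),
]
print(space_relief_targets(tiers))  # {'tier0': ['tier1', 'tier2']}
```

Which regions are actually moved (the more idle ones go down, the busier ones go up) is decided by the other two criteria.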

If a higher tier gets too busy, the latency for this tier can become higher than for lower tiers. To prevent this, a region will not be moved to a faster tier if the latency of the destination tier is higher than the latency of the current tier. An exception is made if the IOPS load on the destination tier is lower than an internal threshold. Then the region will still be moved to the faster tier.
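Expressed as code, the latency check looks roughly like this. The threshold is internal to the array, so the value used here is purely a placeholder, and the function name is hypothetical.

```python
def may_promote(
    dest_latency_ms: float,
    current_latency_ms: float,
    dest_iops: float,
    iops_threshold: float,
) -> bool:
    """Decide whether a region may move to the faster (destination) tier."""
    if dest_latency_ms <= current_latency_ms:
        # The faster tier really is faster right now.
        return True
    # Exception: the destination tier is slower at the moment, but it is
    # so lightly loaded that the move is allowed anyway.
    return dest_iops < iops_threshold


THRESHOLD = 1000  # placeholder, the real threshold is not documented here

print(may_promote(2.0, 8.0, 20000, THRESHOLD))  # True
print(may_promote(9.0, 8.0, 20000, THRESHOLD))  # False
print(may_promote(9.0, 8.0, 500, THRESHOLD))    # True
```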

The last point is the hardest and most complex. The average tier access rate density is considered if the system is limited neither by tier latencies nor by tier space. It describes how busy the regions in a tier are on average, and it’s measured in units of IOPS per gigabyte per minute. The results are compared to individual regions. Depending on the result of this comparison, a region is moved to a lower tier (it’s less busy than other regions) or to a higher tier (it’s busier than other regions).
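A rough sketch of the idea behind the access rate density: I assume here that the density is simply the number of IO accesses divided by the size in GB and by the length of the measurement interval in minutes. The real comparison logic is internal to AO, so this only shows how a single region can be compared with the average of its tier.

```python
REGION_GB = 128 / 1024  # one 128 MB region


def access_rate_density(io_count: float, size_gb: float, minutes: float) -> float:
    """IO accesses per GB per minute (assumed definition)."""
    return io_count / size_gb / minutes


def tier_average_density(region_io_counts: list[float], minutes: float) -> float:
    """Average density over all regions of a tier."""
    total_gb = len(region_io_counts) * REGION_GB
    return access_rate_density(sum(region_io_counts), total_gb, minutes)


def compare_region(region_io: float, tier_avg: float, minutes: float) -> str:
    """Classify one region relative to the tier average."""
    density = access_rate_density(region_io, REGION_GB, minutes)
    if density > tier_avg:
        return "busier than tier average"
    if density < tier_avg:
        return "less busy than tier average"
    return "at tier average"


io_counts = [100, 200, 900]  # IO accesses per region, example values
minutes = 60
avg = tier_average_density(io_counts, minutes)
for io in io_counts:
    print(io, compare_region(io, avg, minutes))
```

With these example values, only the region with 900 accesses is busier than the tier average and would be a candidate for a higher tier.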

The mode configuration parameter has three different options:

- Performance

- Cost

- Balanced

If it’s set to “Performance” more data is moved to faster tiers. In contrast to this, the “Cost” mode moves more data to lower tiers. The “Balanced” mode balances between performance and costs. This should be the default setting.

Tier configuration

You need to configure at least two tiers. Best practice is to configure three tiers. The fastest CPG should be configured as tier 0, the slowest CPG should be configured as tier 2.

| Configuration | Tier 0 | Tier 1 | Tier 2 |
|---|---|---|---|
| 2-Tier SSD - SAS/ FC | at least 5% of the capacity or min. disk requirement for SSD (8 disks) | 95% of the capacity | none |
| 2-Tier SAS/ FC - NL | none | min. 60% of the capacity, 100% of the IOPS | max. 40% of the capacity, 0% of the IOPS |
| 3-Tier SSD - SAS/ FC - NL | at least 5% of the capacity or min. disk requirement for SSD (8 disks) | min. 55% of the capacity | max. 40% of the capacity |

Source: HP 3PAR StoreServ Storage best practices guide, Table 2. Recommended Adaptive Optimization configurations
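If you want to check a planned 3-tier configuration against the capacity shares from the table, a few lines of Python are enough. This sketch only looks at the capacity percentages of the 3-tier row; it ignores the minimum number of SSDs and the IOPS requirements, and the function name is made up.

```python
def check_three_tier(ssd_gb: int, fc_gb: int, nl_gb: int) -> list[str]:
    """Return a list of deviations from the recommended capacity shares."""
    total = ssd_gb + fc_gb + nl_gb
    problems = []
    # Integer arithmetic avoids rounding surprises at the exact limits.
    if ssd_gb * 100 < 5 * total:
        problems.append("tier 0 (SSD) is below 5% of the capacity")
    if fc_gb * 100 < 55 * total:
        problems.append("tier 1 (SAS/ FC) is below 55% of the capacity")
    if nl_gb * 100 > 40 * total:
        problems.append("tier 2 (NL) is above 40% of the capacity")
    return problems


print(check_three_tier(5_000, 55_000, 40_000))  # []
print(check_three_tier(2_000, 48_000, 50_000))  # three deviations
```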

To ensure that only AO moves data to other tiers, you should use the tier 1 CPG for provisioning VVs. No VV should be associated directly with the tier 0 or tier 2 CPG. You should also ensure that all CPGs used in an AO config have the same availability level (Cage, Magazine or Port). If tier 0 and tier 1 have cage availability and tier 2 only has magazine availability, the VV will effectively have only magazine availability.
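The availability rule is a simple "weakest link" rule. In the following sketch the ranking magazine < cage < port is my assumption; the paragraph above only covers the cage/ magazine case. The names are hypothetical.

```python
# Assumed ranking from weakest to strongest availability level.
AVAILABILITY_RANK = {"magazine": 0, "cage": 1, "port": 2}


def effective_availability(tier_levels: list[str]) -> str:
    """The VV is only as available as the weakest CPG in the AO config."""
    return min(tier_levels, key=lambda level: AVAILABILITY_RANK[level])


def levels_consistent(tier_levels: list[str]) -> bool:
    """True if all CPGs in the AO config use the same availability level."""
    return len(set(tier_levels)) == 1


config = ["cage", "cage", "magazine"]  # tier 0, tier 1, tier 2
print(effective_availability(config))  # magazine
print(levels_consistent(config))       # False
```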

Schedule

You can configure a schedule, or you can run AO immediately. If you have multiple AO configs, schedule them all for the same start time. They will run sequentially, but the calculation of which regions have to be moved is done at the same time. If you check the schedule on the CLI, you will notice another interesting fact:

Lab-3PAR-7200 cli% showsched

------ Schedule ------

SchedName File/Command Min Hour DOM Month DOW CreatedBy Status Alert NextRunTime

Compacting_NL_R6 compactcpg -f NL_r6 0 0 * * 6 3paradm suspended Y --

AO-2-Tier-R5 startao -btsecs -43200 -maxrunh 6 -compact auto 2-Tier-R5 0 22 * * * 3paradm active Y 2014-05-28 22:00:00 CEST

AO-2-Tier-R1 startao -btsecs -43200 -maxrunh 6 -compact auto 2-Tier-R1 0 22 * * * 3paradm active Y 2014-05-28 22:00:00 CEST

----------------------------------------------------------------------------------------------------------------------------------------------------

3 total

Do you notice the -compact in the command line of each AO schedule? If you use AO, you don’t have to schedule “compactcpg” to compact CPGs that belong to an AO config. This is done as part of AO. Compacting moves regions from less efficient LDs to fewer, more highly utilized LDs. You don’t have to run AO every hour. It’s sufficient to run it once a day. Run it during periods with low IO. You can exclude the weekend if your company or customer isn’t working at the weekend.
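The two numeric options in the schedule above can be turned into concrete times. I read -btsecs -43200 as "analyze the IO statistics of the 12 hours before the start" and -maxrunh 6 as "run for at most 6 hours". This reading is my assumption, and the helper below is only a sketch.

```python
from datetime import datetime, timedelta


def ao_run_times(run_start: datetime, btsecs: int, maxrunh: int) -> tuple[datetime, datetime]:
    """Return (begin of analysis window, latest end of the AO run).

    Only negative btsecs values (relative to the start time) are handled.
    """
    if btsecs >= 0:
        raise ValueError("this sketch only supports negative (relative) btsecs")
    analysis_begin = run_start + timedelta(seconds=btsecs)
    latest_end = run_start + timedelta(hours=maxrunh)
    return analysis_begin, latest_end


start = datetime(2014, 5, 28, 22, 0)  # NextRunTime from the schedule
begin, end = ao_run_times(start, btsecs=-43200, maxrunh=6)
print(begin)  # 2014-05-28 10:00:00
print(end)    # 2014-05-29 04:00:00
```

With this reading, the run at 22:00 is based on the IO between 10:00 and 22:00, which covers a normal working day.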

Other things to consider

If you use AO, you should avoid other automated techniques that move data between different storage tiers. Yes, if you think of VMware SDRS, that would be such a technique. But only if you use it in fully-automated mode. You can use it in manual mode and apply recommendations if necessary.

Final Words

I don’t say that these are the best practices, but with these topics in mind, it should be easy for you to discuss the requirements and the impact of different AO settings with your customer. If you take a look into the HP 3PAR StoreServ Storage best practices guide, you will recognize some of the practices mentioned above. But always keep in mind: Even the best practice can miss the customer’s requirements. So don’t just apply “best practices” without reflecting on their impact on the customer’s requirements.