VMware vSphere Metro Storage Cluster with HP 3PAR Peer Persistence - Part I

The title of this blog post mentions two terms that need to be explained. First, a VMware vSphere Metro Storage Cluster (VMware vMSC) is a configuration of a VMware vSphere cluster that is based on a stretched storage cluster. Second, HP 3PAR Peer Persistence adds functionality to HP 3PAR Remote Copy software and HP 3PAR OS so that two 3PAR storage systems form a nearly continuous storage system. HP 3PAR Peer Persistence allows you to create a VMware vMSC configuration and to achieve a new level of availability and reliability.

VMware vSphere Metro Storage Cluster

In a vMSC, servers and storage are geographically distributed over short or medium distances. vMSC goes far beyond the well-known synchronous mirror between two storage systems: virtualization hosts and storage belong to the same cluster, but they are stretched between two sites. This setup allows you to move virtual machines from one site to another (vMotion and Storage vMotion) without downtime (downtime avoidance). With a stretched cluster, technologies such as VMware HA can help to minimize the duration of a service outage in case of a disaster (disaster avoidance).

The requirements for a vMSC are:

- Storage connectivity using Fibre Channel, Fibre Channel over Ethernet (FCoE), NFS or iSCSI

- max. 10 ms round-trip time (RTT) for the ESXi management network; on the vMotion network, 10 ms RTT requires vSphere Enterprise Plus (Metro vMotion)

- max. 5 ms round-trip time (RTT) for the synchronous storage replication links

- at least 250 Mbps per concurrent vMotion on the vMotion network
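To make the numbers above a bit more tangible, here is a minimal sketch that checks measured link values against these limits. The `SiteLink` record, its field names and the example measurements are hypothetical; in practice you would feed it with values from your own latency and bandwidth measurements. Only the management RTT, storage RTT and vMotion bandwidth rules are modelled.

```python
from dataclasses import dataclass

# Limits taken from the vMSC requirement list above.
MAX_MGMT_RTT_MS = 10.0      # ESXi management network
MAX_STORAGE_RTT_MS = 5.0    # synchronous storage replication links
MIN_MBPS_PER_VMOTION = 250  # per concurrent vMotion


@dataclass
class SiteLink:
    """Hypothetical measurements for the links between site A and site B."""
    mgmt_rtt_ms: float        # ESXi management network round-trip time
    storage_rtt_ms: float     # synchronous replication link round-trip time
    vmotion_mbps: float       # bandwidth available on the vMotion network
    concurrent_vmotions: int  # vMotions expected to run at the same time


def check_vmsc_link(link: SiteLink) -> list[str]:
    """Return the violated requirements; an empty list means all checks pass."""
    problems = []
    if link.mgmt_rtt_ms > MAX_MGMT_RTT_MS:
        problems.append(f"management RTT {link.mgmt_rtt_ms} ms > {MAX_MGMT_RTT_MS} ms")
    if link.storage_rtt_ms > MAX_STORAGE_RTT_MS:
        problems.append(f"storage RTT {link.storage_rtt_ms} ms > {MAX_STORAGE_RTT_MS} ms")
    # The vMotion network must carry 250 Mbps for every concurrent vMotion.
    needed = MIN_MBPS_PER_VMOTION * link.concurrent_vmotions
    if link.vmotion_mbps < needed:
        problems.append(f"vMotion bandwidth {link.vmotion_mbps} Mbps < {needed} Mbps")
    return problems


if __name__ == "__main__":
    link = SiteLink(mgmt_rtt_ms=3.2, storage_rtt_ms=1.8,
                    vmotion_mbps=1000, concurrent_vmotions=4)
    print(check_vmsc_link(link) or "all vMSC link requirements met")
```

With four concurrent vMotions, the vMotion network needs 4 × 250 Mbps = 1000 Mbps, so a 1 Gbps link is just enough in this example.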

The challenging part of the storage requirements is not the maximum round-trip time; it's the requirement that a datastore must be accessible from both sites. This means that a host in Site A must be able to access (read and write) a datastore on a storage system in Site B and vice versa. vMSC distinguishes between two host access configurations:

- Uniform host access configuration

- Non-Uniform host access configuration

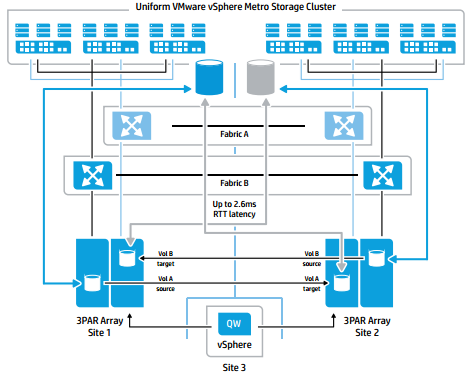

With a uniform host access configuration, the storage systems at both sites can be accessed by all hosts. LUNs from both storage systems are zoned to all hosts, and the Fibre Channel fabric is stretched across the inter-site links. The following figure was taken from the “Implementing vSphere Metro Storage Cluster using HP 3PAR Peer Persistence” technical whitepaper and shows a typical uniform host access configuration.

HPE/ hpe.com

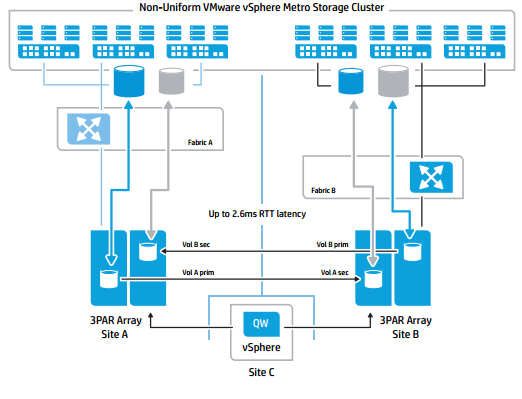

The second possible configuration is the non-uniform host access configuration, in which the hosts only access the site-local storage system. The Fibre Channel fabrics are not stretched across the inter-site links. The following figure was taken from the “Implementing vSphere Metro Storage Cluster using HP 3PAR Peer Persistence” technical whitepaper and shows a typical non-uniform host access configuration. If a storage system fails, the ESXi hosts in that datacenter lose their storage connectivity and the virtual machines running on them fail. VMware HA then restarts these VMs in the other datacenter.

HPE/ hpe.com

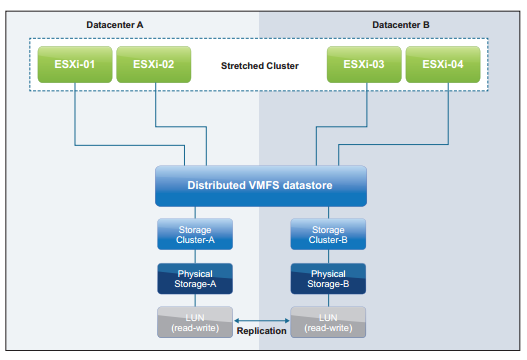

Another possible non-uniform setup uses stretched Fibre Channel fabrics and a kind of virtual LUN: a LUN is mirrored between two storage systems and can be accessed from both sites, while the storage systems ensure the consistency of the data. This figure was taken from the “VMware vSphere Metro Storage Cluster Case Study” technical whitepaper.

HPE/ hpe.com

The uniform host access configuration is currently used most frequently.
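The difference between the two host access configurations boils down to which storage systems each host is zoned to. The following minimal sketch classifies a zoning map accordingly. The array names, site labels and the `access_mode` function are hypothetical, and the "mixed" result is simply a catch-all for maps that match neither definition.

```python
# Hypothetical arrays and the site each one lives in.
ARRAY_SITE = {"array-a": "A", "array-b": "B"}


def access_mode(zoning: dict[str, tuple[str, set[str]]]) -> str:
    """Classify a zoning map (host -> (host site, arrays zoned to the host))."""
    all_arrays = set(ARRAY_SITE)
    # Uniform: every host sees the storage systems of both sites.
    uniform = all(arrays == all_arrays for _, arrays in zoning.values())
    # Non-uniform: every host only sees the storage system(s) of its own site.
    local_only = all(
        arrays == {a for a, s in ARRAY_SITE.items() if s == site}
        for site, arrays in zoning.values()
    )
    if uniform:
        return "uniform"
    if local_only:
        return "non-uniform"
    return "mixed"


uniform_zoning = {
    "esx-a1": ("A", {"array-a", "array-b"}),
    "esx-b1": ("B", {"array-a", "array-b"}),
}
non_uniform_zoning = {
    "esx-a1": ("A", {"array-a"}),
    "esx-b1": ("B", {"array-b"}),
}
print(access_mode(uniform_zoning))      # uniform
print(access_mode(non_uniform_zoning))  # non-uniform
```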

Regardless of the implementation, it’s useful to think about data locality. Let’s assume that a host in datacenter A is running a VM whose files are stored on a datastore on a storage system in datacenter B. With a stretched fabric between the sites, this scenario is entirely possible. What happens to the storage I/O of this VM? Right: it travels across the inter-site links from datacenter A to datacenter B. To avoid this, you can use DRS host groups, VM groups and VM-Host affinity rules to keep each VM on hosts in the same site as the storage system that serves its datastore.
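The grouping logic behind such DRS rules can be sketched in a few lines. This is a minimal sketch assuming a hypothetical inventory (host, datastore and VM names are made up) where each datastore is tagged with the site of the storage system that currently serves it, for example the Remote Copy source. It does not talk to vCenter; it only computes which VMs currently cross sites and which host group and VM group would belong together per site.

```python
from collections import defaultdict

# Hypothetical inventory (names are made up for illustration).
HOST_SITE = {"esx-a1": "A", "esx-a2": "A", "esx-b1": "B", "esx-b2": "B"}
# Site of the storage system that currently serves each datastore.
DATASTORE_SITE = {"ds-a01": "A", "ds-b01": "B"}
# VM -> (host it currently runs on, datastore it lives on)
VMS = {
    "web01": ("esx-a1", "ds-a01"),
    "db01": ("esx-a2", "ds-b01"),   # runs in A, storage in B: I/O crosses the sites
    "app01": ("esx-b1", "ds-b01"),
}


def cross_site_vms(vms, host_site, ds_site):
    """Return the VMs whose host and datastore are in different sites."""
    return sorted(vm for vm, (host, ds) in vms.items()
                  if host_site[host] != ds_site[ds])


def site_groups(vms, host_site, ds_site):
    """Build one host group and one VM group per site; a VM follows its datastore."""
    host_groups, vm_groups = defaultdict(list), defaultdict(list)
    for host, site in host_site.items():
        host_groups[site].append(host)
    for vm, (_, ds) in vms.items():
        vm_groups[ds_site[ds]].append(vm)
    return dict(host_groups), dict(vm_groups)


print(cross_site_vms(VMS, HOST_SITE, DATASTORE_SITE))  # ['db01']
hosts, vms_by_site = site_groups(VMS, HOST_SITE, DATASTORE_SITE)
for site in sorted(hosts):
    print(f"site {site}: VMs {vms_by_site.get(site, [])} should run on {hosts[site]}")
```

Each per-site pair would then become a "should run on hosts in group" rule rather than a "must" rule, so that VMware HA is still allowed to restart the VMs at the other site if a whole site fails.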

Examples of uniform and non-uniform host access configurations (VMware Knowledge Base article numbers in parentheses) are:

| Uniform host access configuration | Non-uniform host access configuration |
|---|---|
| vSphere 5.x support with NetApp MetroCluster (2031038) | Implementing vSphere Metro Storage Cluster (vMSC) using EMC VPLEX (2007545) |
| Implementing vSphere Metro Storage Cluster (vMSC) using EMC VPLEX (2007545) | |
| Implementing vSphere Metro Storage Cluster using HP 3PAR StoreServ Peer Persistence (2055904) | |
| Implementing vSphere Metro Storage Cluster using HP LeftHand Multi-Site (2020097) | |
| Implementing vSphere Metro Storage Cluster using Hitachi Storage Cluster for VMware vSphere (2073278) | |
| Implementing vSphere Metro Storage Cluster using IBM System Storage SAN Volume Controller (2032346) | |

HP 3PAR Remote Copy, Peer Persistence & the Quorum Witness

HP 3PAR Peer Persistence uses synchronous Remote Copy and Asymmetric Logical Unit Access (ALUA) to realize a metro cluster configuration that allows host access from both sites. 3PAR Virtual Volumes (VVs) are synchronously mirrored between two 3PAR StoreServs in a 1-to-1 Remote Copy relationship. The relationship may be uni- or bidirectional, which allows the StoreServs to act as failover systems for each other. To create a vMSC configuration with HP 3PAR StoreServ storage systems, the following requirements must be met:

- 3PAR OS on both StoreServ storage systems must be 3.1.2 MU2 or newer (I recommend 3.1.3)

- A synchronous 1-to-1 Remote Copy relationship

- A round-trip time (RTT) of 2.6 ms or less between the two StoreServs

- A Quorum Witness VM that runs at a third site and is reachable from each 3PAR StoreServ

- The same WWN and LUN ID for each source and target virtual volume

- VMware ESXi 5.0, 5.1 or 5.5

- Hosts must be created with Host Persona 11

- Hosts must be zoned to both 3PAR StoreServ storage systems (this requires a stretched Fibre Channel fabric between the sites)

- iSCSI or FCoE host connectivity is supported starting with 3PAR OS 3.2.1; earlier versions only support FC host connectivity with Peer Persistence

- Both 3PAR StoreServ storage systems must be licensed for Remote Copy and Peer Persistence (I recommend licensing the Replication Suite)
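Several of these requirements can be checked mechanically before you start. The following minimal sketch covers only the RTT limit, the identical WWN and LUN ID for each volume pair and the host persona. The `Presentation` record, the function name and the example WWN are hypothetical placeholders; the sketch does not query a StoreServ, you would fill in the values yourself (for example from the array's CLI output).

```python
from dataclasses import dataclass

MAX_RC_RTT_MS = 2.6        # RTT limit from the requirement list above
VMWARE_HOST_PERSONA = 11   # host persona the ESXi hosts must use


@dataclass(frozen=True)
class Presentation:
    """How one StoreServ presents a virtual volume (hypothetical record)."""
    vv_name: str
    wwn: str
    lun_id: int


def check_peer_persistence(rc_rtt_ms, volume_pairs, host_personas):
    """Return the violated requirements; an empty list means all checks passed.

    rc_rtt_ms     -- measured round-trip time between the two StoreServs
    volume_pairs  -- list of (source, target) Presentation tuples
    host_personas -- host name -> host persona configured on the StoreServs
    """
    problems = []
    if rc_rtt_ms > MAX_RC_RTT_MS:
        problems.append(f"RTT {rc_rtt_ms} ms exceeds {MAX_RC_RTT_MS} ms")
    for src, tgt in volume_pairs:
        # Source and target must look like the same LUN to the ESXi hosts.
        if src.wwn != tgt.wwn:
            problems.append(f"{src.vv_name}: source and target WWN differ")
        if src.lun_id != tgt.lun_id:
            problems.append(f"{src.vv_name}: LUN ID {src.lun_id} on source, {tgt.lun_id} on target")
    for host, persona in sorted(host_personas.items()):
        if persona != VMWARE_HOST_PERSONA:
            problems.append(f"{host}: host persona {persona}, expected {VMWARE_HOST_PERSONA}")
    return problems


# Made-up example: one datastore volume presented identically by both arrays.
src = Presentation("vv_ds01", "60002AC0000000000000000000000001", 10)
tgt = Presentation("vv_ds01", "60002AC0000000000000000000000001", 10)
print(check_peer_persistence(1.2, [(src, tgt)], {"esx-a1": 11, "esx-b1": 11}))  # []
```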

A VV can be a source or a target volume. Source VVs belong to a primary Remote Copy group, target VVs belong to a secondary Remote Copy group. VVs are organized into Remote Copy groups to ensure I/O consistency: write order is preserved across the VVs of a group, so all VVs that require write-order consistency with each other should belong to the same Remote Copy group. Even VVs that don’t need write-order consistency should belong to a Remote Copy group, just to simplify administration. A typical uniform vMSC configuration with 3PAR StoreServs has Remote Copy groups replicating in both directions, so both StoreServs act as source and target in a bidirectional synchronous Remote Copy relationship.

It’s important to understand that the source and target volumes share the same WWN and are presented using the same LUN ID. The ESXi hosts must use Host Persona 11. While creating the Remote Copy groups, the target volumes can be created automatically; this ensures that the source and target volumes use the same WWN. When the volumes from the source and target StoreServ are presented, the paths to the target StoreServ are marked as “standby”. In case of a failover, these paths become active and the I/O continues.

The Quorum Witness is a RHEL-based appliance that communicates with both StoreServs and triggers a failover in some specific scenarios. The following table was taken from the “Implementing vSphere Metro Storage Cluster using HP 3PAR Peer Persistence” technical whitepaper. As you can see, an automatic failover is only triggered in one specific scenario.

| Failure scenario | Replication stopped | Automatic failover |
|---|---|---|
| Array to Array remote copy links failure | Y | N |
| Single site to Quorum Witness network failure | N | N |
| Single site to Quorum Witness network and Array to Array remote copy link failure | Y | Y |
| Both sites to Quorum Witness network failure | N | N |
| Both sites to Quorum Witness network and Array to Array remote copy link failure | Y | N |
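The pattern in the failure scenarios can be condensed into a very simple rule. The following sketch encodes the five rows as link states, assuming that the two Y/N values per row mean "replication stopped" and "automatic failover". It then checks a simplified rule against them: replication stops whenever the Remote Copy links are down, and an automatic failover happens only if, in addition, exactly one site can still reach the Quorum Witness. This is an illustration derived from the table only, not HP's actual Quorum Witness algorithm, which is more involved.

```python
# Failure table encoded as link states (assumed column meaning, see above):
# (rc_links_up, site_a_reaches_qw, site_b_reaches_qw) -> (replication_stopped, auto_failover)
TABLE = {
    (False, True, True): (True, False),    # array to array remote copy links failure
    (True, False, True): (False, False),   # single site to Quorum Witness network failure
    (False, False, True): (True, True),    # single site to QW network + remote copy link failure
    (True, False, False): (False, False),  # both sites to QW network failure
    (False, False, False): (True, False),  # both sites to QW network + remote copy link failure
}


def simple_model(rc_links_up: bool, a_reaches_qw: bool, b_reaches_qw: bool):
    """Simplified rule that reproduces the table (not the real 3PAR logic)."""
    replication_stopped = not rc_links_up
    # The witness can only act as a tie-breaker if exactly one site still reaches it.
    auto_failover = replication_stopped and (a_reaches_qw != b_reaches_qw)
    return replication_stopped, auto_failover


for state, expected in TABLE.items():
    assert simple_model(*state) == expected, state
print("simplified model reproduces all five table rows")
```

The rule shows why the Quorum Witness must run at a third site: without an independent tie-breaker, a failure of the Remote Copy links alone cannot be distinguished from the failure of the partner array, so no automatic failover takes place.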

Summary

VMware vSphere Metro Storage Cluster (vMSC) is a special configuration of a stretched compute and storage cluster. A vMSC is usually implemented to avoid downtime. A vMSC configuration makes it possible to move virtual machines, and thus workloads, between sites. Beyond this, vMSC can avoid downtime caused by a failed storage system. Using HP 3PAR Remote Copy, 3PAR Peer Persistence and the Quorum Witness, two HP 3PAR StoreServ storage systems can form a uniform vMSC configuration. This allows movement of VMs and workloads between sites and also a transparent failover between storage systems if one of the StoreServs fails.

Part II of this small series will cover the configuration of Remote Copy and Peer Persistence.